Originally Presented as a Society for Simulation in Healthcare (SSH) Virtual Learning Lab

In this article, we are going to share some thoughts about artificial intelligence, its current capabilities and limitations, the risks and fears related to AI, and the building blocks of current AI systems, from artificial neurons all the way to ChatGPT. Then we’ll review the challenges of integrating AI into simulation experiences, highlighting how we at PCS are tackling some of these challenges. Let’s get started.

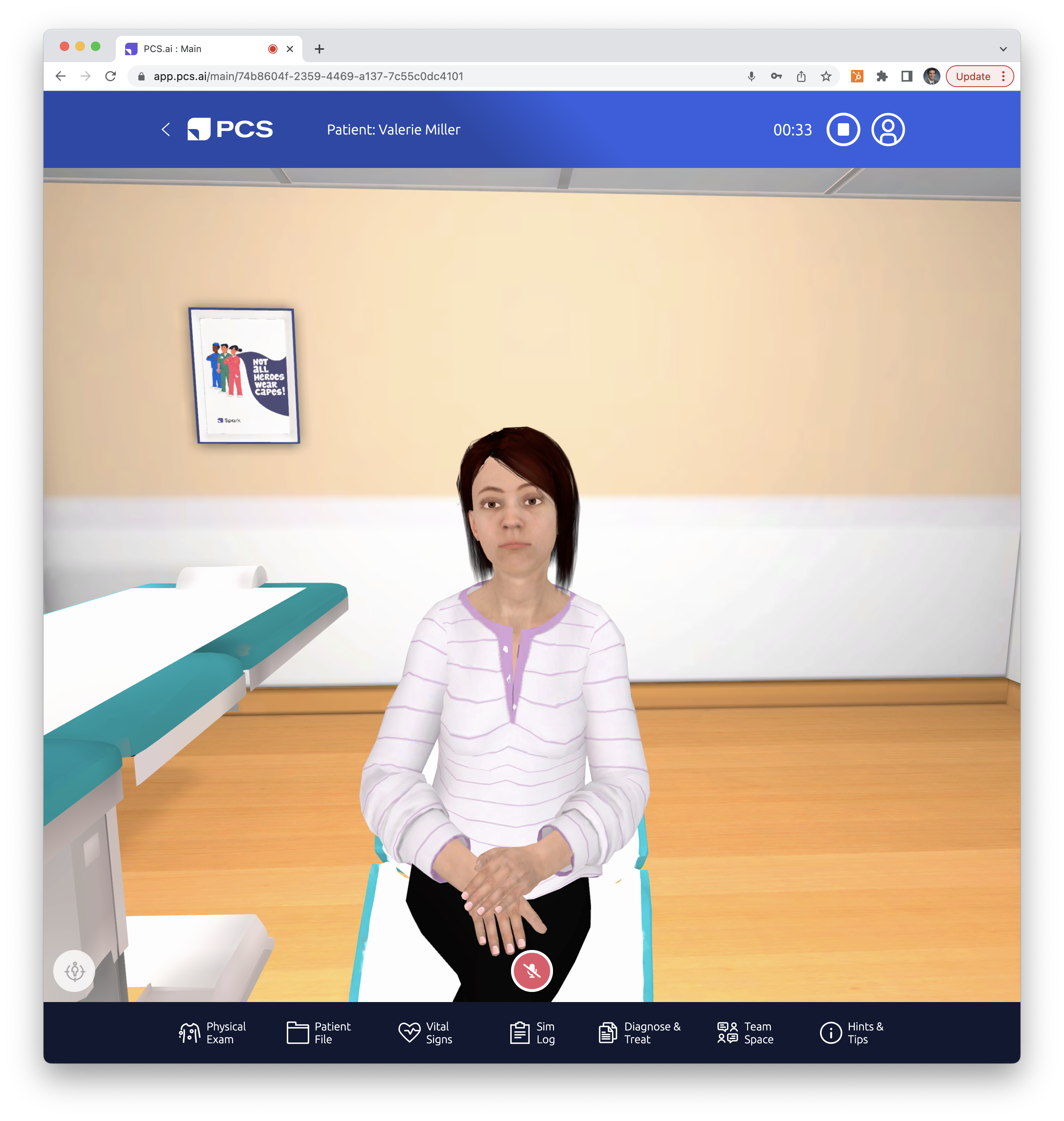

A screenshot of PCS Spark, the most intelligent digital patient platform, driven by Artificial Intelligence.

First, let’s define what intelligence is: the ability to perceive, synthesize and process information, including but not limited to the capacity for abstraction, logic, learning, reasoning, creativity, and problem solving. So basically, that’s being smart, the very thing that makes us human and differentiates us from all other living things around us. Many species show various levels of intelligence, but nothing like our abstract ideas that enabled us to go to the Moon and build complex electronic computers.

Artificial Intelligence is intelligence demonstrated by the machines we build. The most complex machines humans ever built are today’s electronic computers, so naturally, Artificial Intelligence is being developed utilizing these extremely fast and generalized number-crunching machines.

It is worth mentioning that as the capabilities of AI improve, we constantly re-classify the more mundane capabilities to no longer be AI, just “things that our machines do”. In 2023, Face Unlock and image labeling are no longer seen as real AI, although they were on the leading edge of AI development just a few years ago. This continuous “moving of the goalposts” is known as the “AI effect”.

Among all AIs, we can differentiate between narrow and general AI. Narrow AI systems are specialized forms of intelligence rapidly taking over various information-processing functions that were previously limited to humans only, but they are all limited to very specific forms of intelligence. Some of these AIs are designed for speech recognition, image processing, playing games, or driving cars.

On the other hand, AGI (Artificial General Intelligence) is a machine that demonstrates human-level cognitive performance in virtually all domains of interest.

So, have we reached AGI yet? Well, the answer is not as clear-cut today as it would have been just a year ago.

This is because GPT-4 by OpenAI shows best-of-humans level mastery of language. It can write essays and poems, and it can translate text into a different language, tone, style, or format. It is pretty good at complex math problems (but not superhuman when it comes to logic), and great at coding (after all, code is just another language with different rules, so that comes naturally to GPT-4). With that said, GPT-4 often hallucinates (makes things up and states them as facts) and insists on surprisingly dumb statements. Finally, its ability to produce new knowledge, i.e., to develop information we did not have before, in particular in the fields of science, and more generally, about our understanding of the world around us, is very limited.

Having said that, current state-of-the-art AI systems have achieved an amazing and unexpected level of intelligence, one that most experts in the field anticipated only much later in the 21st century, if ever. This is definitely a major breakthrough with huge implications for our society in the coming years, not decades. Having access to human-level, generic intelligence at a very low cost is going to make many things affordable that were uneconomical in the past. This will transform what we humans may want and get, similar to how microelectronics transformed the abilities of our machines. Except this transformation is going to happen much faster, as it is entirely driven by software, which is relatively easy to change quickly, partially thanks to the help of these very AI systems!

Today it seems likely that true AGI is going to be possible with the current technology, given some “small breakthroughs”, and maybe another magnitude of increase in computing power dedicated to it.

So we’ll have an AI that is as smart as all of us. If we humans can, together, improve AI up to that point, there is no reason to believe that AI cannot further improve itself, with or without our assistance. Actually, once it has surpassed human capability, it is likely to be able to increase its pace of progress. This means that thanks to this feedback loop, this AGI is going to become an Artificial Super Intelligence and it will get there surprisingly fast - that’s the nature of an exponential feedback loop.

So, if things progress in the direction that we are currently marching on, we’ll soon share this planet with something that is vastly more intelligent than all of us combined. So, what’s it going to do with us? Well, it depends on its priorities, objectives, and how they are aligned with the interests of humanity. This is called the Alignment Problem.

It seems to be extremely difficult both to design a universal alignment function (i.e., what we want the AI to consider important to improve and optimize for) and, at the same time, to ensure that the AI (which is as superior to us intellectually as we are to, say, ants) closely adheres to this alignment function, regardless of the self-improvements it executes.

To make things even more alarming, the Alignment Problem is unlike any other scientific challenge humanity has faced before. The typical modus operandi of science is try-fail-try-fail-try-succeed. However, this time we must get AI alignment right the very first time we create a superintelligence. If we mess up and the superintelligence decides to, let’s say, eliminate all human suffering by eliminating all humans, we won’t get a second chance to explain that this is not what we meant.

To present a glimmer of hope: some experts believe that our best bet is NOT to explicitly share our goals with the AI as any one of us sees them today, but rather to program the AI to continuously improve its understanding of, and empathy for, the goals and priorities of all humans, endlessly refining its own infinitely complex compound objectives.

So, on one hand, we should take it slow and we should not rush into building something we can neither control nor understand. On the other hand, we need to hurry and we need to be the first to build an AGI. Why? Imagine if bad actors get there first - a superintelligence in malicious hands could permanently shift the balance of power. Also, the first AGI could have the power to make developing later, competing and independent AGIs impossible.

Enough philosophical contemplation of what the future may bring. Let’s see what we’ve got today and how it works, in a nutshell.

We’ll start from the bottom and build up all the way to ChatGPT.

Everything starts with the artificial neuron. This element can be seen as a digital simulation of a biological neuron. This neuron takes multiple inputs on its simulated “dendrites”, calculates a weighted sum of them, passes the result through a transfer function and outputs the result on its “digital axon”.

So, it is a simple number cruncher that turns many inputs into an output based on a number of parameters describing its weighting and transfer functions.
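To make this concrete, here is a minimal Python sketch of a single artificial neuron. The sigmoid transfer function, the `bias` term, and the example numbers are our own assumptions for illustration; many other transfer functions are used in practice.

```python
import math


def sigmoid(x: float) -> float:
    """One possible transfer function: squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron(inputs, weights, bias=0.0, transfer=sigmoid):
    """Weighted sum of the inputs ("dendrites"), passed through the transfer function.

    The bias is an extra adjustable parameter (an assumption, not mentioned in the text).
    """
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return transfer(weighted_sum)  # the value sent out on the "digital axon"


# Three inputs, three weights: the weighted sum is 0.4 - 0.1 - 0.3, i.e. about zero.
output = neuron([1.0, 0.5, -1.0], [0.4, -0.2, 0.3])
print(output)
```

The weights and the bias are the “parameters” referred to above: change them and the same inputs produce a different output.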

One neuron is not very useful on its own, so researchers started connecting these digital neurons into networks very early on. In these networks, the output of some neurons becomes one of the inputs of other neurons. These networks can be trained by adjusting the weights and the transfer functions of the individual neurons to better match the desired output. When we start making these adjustments, we don’t know how these changes are going to affect the outputs for a particular input, but we can try over and over until we get the desired output in relation to the input.

Let’s see an example. Let’s say we are building an image labeling AI, one that can automatically distinguish between images of various animals, and label them properly. Our goal is to create an AI that can label correctly not only the images it has seen before, but also images it sees for the very first time. This requires the ability to generalize, i.e., to see in a picture of an elephant, the very “elephantness” of it.

So, we grab a large group of neurons, connect them, set their parameters to something, anything, and start feeding this network an extremely large number of pictures of various animals. We keep count of the number of times this network labeled correctly and incorrectly. Then we adjust the parameters (just tweak them a bit), and try again. If this tweak improved the results, i.e., it is now correct more often than before, then we continue tweaking the parameters in this direction.
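The tweak-and-check loop described above can be sketched in a few lines of Python. Everything here is a toy assumption: instead of animal pictures we label points in a square, the “network” is a single neuron with three parameters, and tweaks are random nudges that we keep only when they do not make the score worse. Real systems typically compute the direction of each tweak with gradient descent and backpropagation rather than guessing.

```python
import random

rng = random.Random(0)  # fixed seed so the run is repeatable

# Toy data set (hypothetical): a point (x, y) gets label 1 when x + y > 1, else 0.
points = [(rng.random(), rng.random()) for _ in range(200)]
DATA = [((x, y), 1 if x + y > 1.0 else 0) for x, y in points]


def predict(params, features):
    """A single neuron with a step transfer function."""
    w1, w2, bias = params
    return 1 if w1 * features[0] + w2 * features[1] + bias > 0 else 0


def count_correct(params):
    """Keep count of how many examples the current parameters label correctly."""
    return sum(predict(params, features) == label for features, label in DATA)


def train(steps=2000, step_size=0.1):
    params = [rng.uniform(-1, 1) for _ in range(3)]  # "set to something, anything"
    initial = best = count_correct(params)
    for _ in range(steps):
        candidate = [p + rng.gauss(0, step_size) for p in params]  # tweak a bit
        score = count_correct(candidate)
        if score >= best:  # keep the tweak only if it is not worse than before
            params, best = candidate, score
    return params, initial, best


params, initial_correct, final_correct = train()
print(f"correct before: {initial_correct}/{len(DATA)}, after: {final_correct}/{len(DATA)}")
```

Because a tweak is only kept when the count of correct labels does not drop, the score can never get worse from one step to the next.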

This takes an enormous number of attempts: we’ll need to run these training cycles involving testing, adjusting and re-testing over and over. This is especially true if we start with a reasonably large network of neurons randomly connected as there are a huge number of parameters to adjust over a wide range of values. So, how could we make this more efficient? By structuring our artificial neural network in a way that somewhat mimics the functional organization of biological neural networks: more complex functions driven by the outputs of less complex functions. This sort of builds multiple layers of networks, each feeding its output into the one above it, creating a hierarchy of networks, which can be trained separately. This system now has depth to it - yes, this is the essence of Deep Learning, the structuring paradigm behind most modern AI systems.
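As a rough illustration of this layering, the sketch below feeds the outputs of one layer of neurons into the next. The layer sizes, weights, and the sigmoid transfer function are arbitrary assumptions chosen only to show the data flow, not a trained model.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, layer):
    """A layer is a list of (weights, bias) pairs, one pair per neuron."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, weights)) + bias)
        for weights, bias in layer
    ]


def network_forward(inputs, layers):
    """Each layer's outputs become the inputs of the layer above it."""
    activations = inputs
    for layer in layers:
        activations = layer_forward(activations, layer)
    return activations


# Hypothetical network: 3 inputs -> 2 hidden neurons -> 1 output neuron.
hidden_layer = [([0.5, -0.5, 0.1], 0.0), ([-0.3, 0.8, 0.2], 0.1)]
output_layer = [([1.0, -1.0], 0.0)]
result = network_forward([1.0, 2.0, 3.0], [hidden_layer, output_layer])
print(result)
```

Adding depth is then just a matter of appending more layers to the list passed to `network_forward`.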

A certain kind of Deep Learning model is the Transformer. It is designed to process a sequence of inputs (say, a list of words), but it has the unique ability to process all inputs concurrently, which makes the training process much more efficient. This enabled researchers to scale up these models substantially, creating models with billions of parameters. From the entire sequence of inputs, it has the ability to grab relative importance and context, making this model especially useful in Natural Language Processing applications. Actually, the T in GPT stands for Transformer.
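The way a Transformer weighs “relative importance and context” is usually called attention. Below is a heavily simplified sketch of scaled dot-product self-attention in plain Python. It assumes each word is already represented as a short list of numbers and, unlike a real Transformer, it uses no learned projection matrices; the example vectors are made up.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """For every position, mix all positions, weighted by similarity to it."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Similarity of this position to every position in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Three made-up "word" vectors: the first two are similar, the third is different.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(tokens)
print(weights[0])  # how much the first word attends to each of the three words
```

The loop here handles one position at a time for readability. Each position’s result does not depend on the others, which is why real implementations can compute all of them at once as matrix operations.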

And now we have arrived at “GPT”, the Generative Pre-trained Transformer: a large language model that truly masters human language generation because it has been pre-trained on much of the text created in the age of the internet, roughly a trillion letters of it.

These modern AI systems do the first part of their training unsupervised, using a vast amount of training data and learning its internal structure and element distribution. They automatically discover the underlying rules that one needs to understand to continue a conversation. This is absolutely necessary given the extremely large amount of training these huge systems need: there’s no way we could build an army of human trainers to oversee every step of this learning process. However, this is not enough. Once we have a system that can generate outputs that match the rules of the training data sets, humans are absolutely necessary to fine-tune its training using Reinforcement Learning from Human Feedback (RLHF). Essentially, the AI gives a few output options, and the human agents pick the best one. This feedback is funneled back into the AI through a continuously adjusted “reward function”, enabling the system to learn from the feedback.
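To give a feel for how human picks can shape a reward signal, here is a toy Python sketch. It is an assumption-heavy simplification: each candidate output simply gets a score, and every time a human prefers one option over another we nudge the scores apart using a pairwise (logistic) preference rule. Real RLHF pipelines train a neural reward model and then optimize the language model against it; the names `update_rewards` and `feedback` are hypothetical.

```python
import math


def update_rewards(rewards, chosen, rejected, learning_rate=0.5):
    """Nudge scores so the human-preferred option ranks above each rejected one."""
    for other in rejected:
        # Probability that the current scores already agree with the human's pick.
        p_agree = 1.0 / (1.0 + math.exp(rewards[other] - rewards[chosen]))
        step = learning_rate * (1.0 - p_agree)  # bigger step when we disagree more
        rewards[chosen] += step
        rewards[other] -= step
    return rewards


# Three candidate outputs, all starting with the same score.
rewards = {"A": 0.0, "B": 0.0, "C": 0.0}

# Each entry: (option the human picked, options the human passed over).
feedback = [("B", ["A", "C"]), ("B", ["A"]), ("C", ["A"])]
for chosen, rejected in feedback:
    update_rewards(rewards, chosen, rejected)

ranking = sorted(rewards, key=rewards.get, reverse=True)
print(ranking)
```

After these three rounds of feedback, option B, which was picked most often, ends up with the highest score, and option A, which was never picked, with the lowest.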

This brings us to where we are in 2023 with state-of-the-art Artificial Intelligence. What can we take from that and apply to AI in healthcare simulation?

AI in Simulation

There are multiple areas of functionality in human patient simulation that can be improved with AI. Some of the most relevant ones are patient communication, physiological modeling of complex systems, and automated assessment of learner actions. In 2023, it would be a missed opportunity to use a simulation platform that does not provide sufficient capabilities along all three of these dimensions, all of which can be AI-supported. Having the assistance of AI is great and the benefits are often discussed; however, the risks of implementing AI-enabled patient simulation are often overlooked. Let’s look at a few of those and analyze our expectations with regard to our simulation experiences.

You want your simulation to realistically simulate human patients, and being able to communicate with a simulator drastically increases the realism. Your simulator needs to talk like a patient, not like an AI assistant. You don’t need a simulator that can talk via ChatGPT, you need a simulator that has an AI-brain that was trained to act like a patient meeting healthcare professionals, all the time, every time. This training process requires a huge amount of patient interaction based training data. There is a substantial snowball effect at play: more simulated interactions make the system better, making it a more attractive choice to an increasing number of learners, further accelerating the growth of this data set.

To achieve the educational objectives of simulation, as well as to validate achieving those objectives, simulations must be reproducible. The patient has to behave identically, in every relevant way, for all learners who experience this simulation. Slight variations in questioning the patient should not trigger substantially different responses. We need consistency and some sort of standardization to facilitate the learning process, and to maintain measurability of learner performance. Some of the most advanced AI models are not designed for this and often “improvise”, which could negatively impact learning outcomes. Your AI-enabled simulator has to be designed to ensure this consistency, and only risk delivering AI “hallucinations” in inconsequential situations, i.e., when answering medically irrelevant questions.
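One way to picture this requirement is a design where differently worded questions are first mapped to the same underlying intent, and medically relevant intents always return the same authored answer. The Python sketch below is purely illustrative and is not a description of how PCS Spark works: the intents, keywords, answers, and the crude keyword matching are all hypothetical stand-ins for a trained language-understanding component.

```python
import re

# Authored, reproducible answers for medically relevant intents (hypothetical).
SCRIPTED_ANSWERS = {
    "pain_location": "It hurts right here, in the middle of my chest.",
    "pain_onset": "It started about an hour ago.",
}

# Crude keyword sets standing in for a real intent classifier (an assumption).
INTENT_KEYWORDS = {
    "pain_location": {"where", "location", "hurt", "hurts", "pain"},
    "pain_onset": {"when", "start", "started", "began", "long"},
}


def classify_intent(question):
    """Pick the intent whose keywords overlap most with the question, if any."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    best_intent, best_overlap = None, 0
    for intent, keywords in INTENT_KEYWORDS.items():
        overlap = len(words & keywords)
        if overlap > best_overlap:
            best_intent, best_overlap = intent, overlap
    return best_intent


def small_talk(question):
    """Stand-in for a free-form generative model, used only off-script."""
    return "I'm not sure, I just want to feel better."


def patient_reply(question):
    intent = classify_intent(question)
    if intent is not None:
        return SCRIPTED_ANSWERS[intent]  # identical for every learner
    return small_talk(question)  # improvisation limited to unrecognized questions


print(patient_reply("Where does it hurt?"))
print(patient_reply("Can you show me where the pain is?"))
```

Both questions resolve to the same intent, so every learner hears the same answer regardless of phrasing.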

Your simulators must be reliable. They have to work all the time, with a consistent response time, regardless of the current load on some AI service. This is hard to guarantee with some of the bleeding-edge AI services: they are actually surprisingly unreliable. During certain times of the day, for example, ChatGPT may become unavailable due to the high server load from thousands of other users trying to access the service. However, a well-designed system can have intelligent fallbacks, also known as graceful degradation, so that the system performs only slightly worse in the absence of a particular AI service, but does not stop completely.
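Graceful degradation can be sketched as a wrapper that gives the AI service a fixed time budget and switches to a simpler local answer when the service is slow or fails. In this Python sketch the three “services” and the fallback are hypothetical placeholders, and the timeout value is arbitrary.

```python
import concurrent.futures
import time

_POOL = concurrent.futures.ThreadPoolExecutor(max_workers=4)


def reply_with_fallback(question, ai_service, fallback, timeout_seconds=2.0):
    """Ask the AI service, but never wait longer than the time budget."""
    future = _POOL.submit(ai_service, question)
    try:
        return future.result(timeout=timeout_seconds), "ai"
    except Exception:  # covers both a timeout and an error raised by the service
        future.cancel()  # best effort; a call that already started keeps running
        return fallback(question), "fallback"


# Hypothetical stand-ins for a remote AI service in three different states.
def healthy_service(question):
    return "My chest feels tight."


def overloaded_service(question):
    time.sleep(0.5)  # simulates a service that responds too slowly
    return "My chest feels tight."


def failing_service(question):
    raise ConnectionError("service unavailable")


def scripted_fallback(question):
    return "Sorry, could you ask me that again?"


results = [
    reply_with_fallback("How do you feel?", service, scripted_fallback, timeout_seconds=0.1)
    for service in (healthy_service, overloaded_service, failing_service)
]
for text, source in results:
    print(source, "->", text)
```

The learner always gets a reply within roughly the same time budget; only the quality of the reply varies with the availability of the service.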

PCS started building AI-enabled simulators more than seven years ago. When we embraced AI, there was no other simulator company even considering the benefits of using AI in healthcare simulation. Things have certainly changed as of late, and we see more and more simulation companies talking about AI. It is easy to underestimate the difficulty of building an AI-driven product and to confuse that with building an AI-enabled product. What makes PCS Spark unique in 2023? Instead of snapping on an AI to support a certain function of an existing product, Spark was designed from the ground up to be an AI-driven simulation platform. This, combined with years of training data collected from hundreds of thousands of simulation experiences, enabled us to build the smartest AI-driven digital patients in the world.

In recent years, AI has evolved at a breakneck pace, far surpassing the expectations of… anybody, really. All of this progress is a result of the fact that artificial neural networks based on deep learning could be taken further than anticipated. We don’t know how much further this paradigm can go, but it is already at a useful stage in many areas of life, including patient simulation. Patient simulators can become more realistic and cost-efficient as a result of being AI-driven. PCS Spark is the amalgamation of multiple AI systems, enabling a uniquely intelligent way to simulate.

If you made it this far in this article, consider getting in touch with us! Visit www.pcs.ai or email us at info@pcs.ai to learn more about AI in simulation and PCS Spark, the AI-driven digital patient platform.